GPT-3 is out in private beta and has been generating a lot of buzz on social media lately. GPT-3 was built by OpenAI, which was founded by Elon Musk, Sam Altman and others in 2015. Generative Pre-trained Transformer 3 (GPT-3) is a gigantic model with 175 billion parameters. In comparison, the previous version, GPT-2, had 1.5 billion parameters. The larger, more complex model enables GPT-3 to do things that weren't previously possible.

Applications of GPT-3

A better model opens up a lot of new possibilities. GPT-3 was trained on 45 TB of data gleaned from the internet, and the potential applications are immense. Twitter is abuzz with the initial results.

Medical Diagnosis

The model has been able to generate relevant medical text about obscure medical terms, as seen in the tweet below.

Programming

Another application driving significant interest is its direct use in software development. The demo below shows GPT-3 interpreting Python code and explaining what it does. This could be a major productivity boost, since programmers notoriously dislike reading somebody else's code. Imagine a tool that comments all of your existing code, including code written by engineers who left your organisation long ago.

GPT-3 has also been able to generate code from text. The demo below shows JSX being generated from a plain-text description.

The demos above do seem mind-blowing, but GPT-3 is not going to replace programmers. Instead, it can power assistants that help programmers work faster and more efficiently.

Writers, bloggers and journalists

GPT-3 can churn out text from only a few words of input. It could be fed a series of financial results and produce a short article summarising them. In principle, I could feed it a few blogs and articles about any topic, say GPT-3, and it could give me a new article summarising them. People are also exploring whether GPT-3 can be used to build a tool that helps writers get over writer's block.

Check out this site, which demonstrates creative writing by GPT-3, including poetry, articles and puns.

Customer Service

Chatbots have been used extensively by many companies, mostly as a first filter for incoming customer queries. With GPT-3's additional capabilities, these chatbots could become able to resolve more customer issues on their own. Studies have shown that people prefer talking to humans rather than chatbots, but as bots become more realistic, more and more people will be unable to tell the difference. Automated bots will then handle an increasing proportion of queries.

GPT-3 under the hood

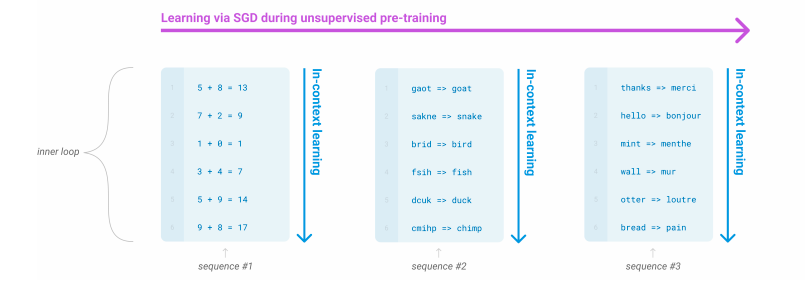

The GPT-3 paper, 'Language Models are Few-Shot Learners', was released a little earlier and gives deeper insight into how the model was built. NLP models have performed well for a while, and recent ones are largely task-agnostic in their architecture. However, for specific tasks they still need to be fine-tuned on large task-specific datasets. This is quite different from how humans learn. For example, we can show a child three examples of acts of kindness, and she can then tell whether a fourth example is an act of kindness or not. For humans, language is largely a few-shot learning process. This is not true of most models.

GPT-3 has demonstrated outstanding NLP capabilities with only a few examples of a particular task. Thanks to its large model size and huge training dataset, it has developed strong in-context learning: it can generalise from examples supplied in the prompt itself, without any update to its weights.
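To make "a few examples in the prompt" concrete, here is a minimal sketch of how a few-shot prompt might be assembled for the act-of-kindness task above. The `build_few_shot_prompt` helper and the `Example:`/`Kind:` layout are hypothetical illustrations, not a format documented by OpenAI. The sketch only builds the text; it does not call any API. The model would be expected to continue the text after the final `Kind:`.

```python
# Hypothetical helper: the "Example:/Kind:" layout is an illustrative
# assumption, not a prompt format documented by OpenAI.
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: an instruction, k labelled
    demonstrations, then the unlabelled query for the model to complete."""
    parts = [instruction, ""]
    for text, label in examples:
        parts.append(f"Example: {text}")
        parts.append(f"Kind: {label}")
        parts.append("")  # blank line separates demonstrations
    # The query follows the same pattern but leaves the label blank;
    # a model would be expected to fill it in by continuing the text.
    parts.append(f"Example: {query}")
    parts.append("Kind:")
    return "\n".join(parts)


# Three labelled demonstrations (made-up sentences, mixing yes and no).
examples = [
    ("She shared her lunch with a classmate who forgot his.", "yes"),
    ("He pushed past an elderly man to get on the bus first.", "no"),
    ("They helped a neighbour carry groceries up the stairs.", "yes"),
]
prompt = build_few_shot_prompt(
    "Decide whether each example is an act of kindness.",
    examples,
    "He held the door open for someone carrying heavy boxes.",
)
print(prompt)
```

The key point is that nothing about the model changes between tasks. Only the text in front of the query changes, which is what distinguishes in-context learning from fine-tuning.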

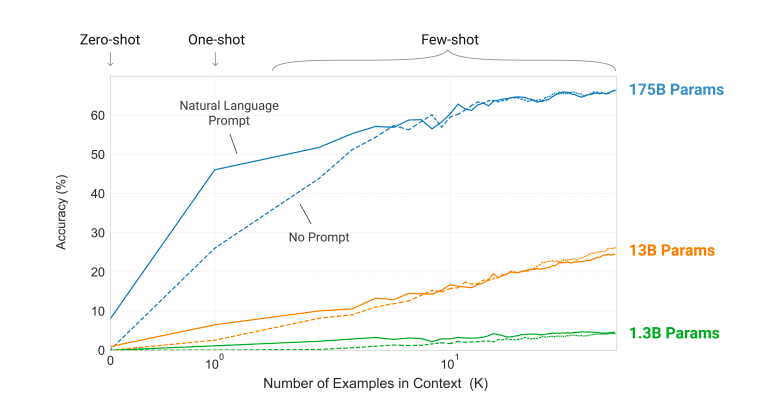

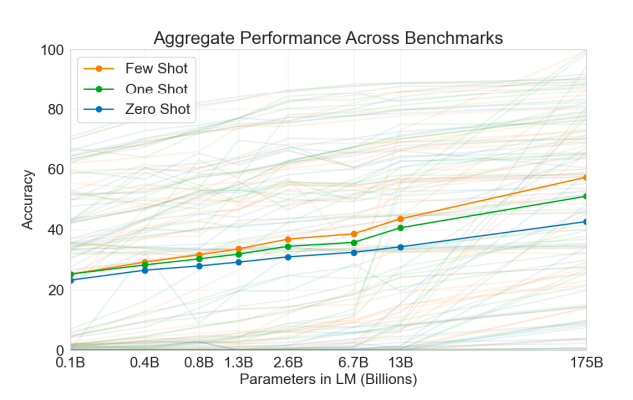

GPT-3 has 175 billion parameters. In comparison, GPT-2 had 1.5 billion, and the Google and Facebook equivalents have around 10 billion. Humans have roughly 86 billion neurons in their brains. GPT-3 is very large, and the paper shows that in-context learning curves are considerably steeper for larger models: bigger models gain more from each additional example in the prompt.

Size Does Matter

A model this large means that small research teams and companies will not be able to train or run it themselves. This article estimates that the training process would have required 350 GB of memory and cost $12.6 million. Very few companies or researchers have access to such resources, which also makes it difficult for them to compete. We don't yet know what GPT-4, or the response from tech heavyweights such as Google and Facebook, will look like. Maybe this will trigger a race for ever-larger models.
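The following back-of-the-envelope calculation shows why size alone puts such a model out of reach. It assumes weights stored as 16-bit floats (2 bytes per parameter), and on that assumption 175 billion parameters come to exactly 350 GB. This covers only holding the weights. Training needs considerably more memory on top of that, for gradients, optimizer state and activations. The `weight_memory_gb` helper is a hypothetical illustration.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes).
    bytes_per_param=2 assumes 16-bit floats; 4 would mean 32-bit floats."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175e9  # 175 billion parameters
GPT2_PARAMS = 1.5e9  # 1.5 billion parameters

print(weight_memory_gb(GPT3_PARAMS))     # 350.0 (16-bit floats)
print(weight_memory_gb(GPT3_PARAMS, 4))  # 700.0 (32-bit floats)
print(weight_memory_gb(GPT2_PARAMS))     # 3.0
print(GPT3_PARAMS / GPT2_PARAMS)         # roughly 117x more parameters
```

GPT-2's weights fit comfortably on a single high-end GPU. GPT-3's 16-bit weights alone would have to be split across many accelerators before any computation can start.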

GPT-3 Release

OpenAI has not open-sourced this model. It is currently in active beta, and if you are interested you can sign up for it. OpenAI is clearly controlling the release process: the cherry-picked snippets of its demos have created the buzz they were intended to, and a gated release also lets OpenAI track negative feedback and unsavoury usage. A model this powerful is a great propaganda tool. Its ability to create almost human-like content will interest organisations aiming to influence public opinion. Twitter bots will seem almost human.

We still don't know how this API will be priced when it is released. It is unlikely to be very cheap, considering the amount of compute a 175-billion-parameter model requires. OpenAI is probably also looking for ways to monetise its research. For now, we can only speculate.

GPT-3 is good beyond doubt, but it has several downsides and plenty of scope for improvement, as the paper's authors acknowledge. GPT-3 was trained on a large fraction of the public internet, so it has also picked up the biases and prejudices that plague humanity. Like all technologies, it can be used for good and for bad. I am going to trust our better angels that it will be used for more good than bad.