We all know what’s ruling technology right now.

Yes, it is Artificial Intelligence, Machine Learning, Data Science, and Data Engineering.

Therefore, now is the time to propel your Data Science career. Look no further, because you can enroll in a PG Certificate Course in Data Science from IIT Roorkee. To make enrollment easy for you, here’s a Free Scholarship Test you can take to earn discounts of up to Rs 75,000!

The Scholarship Test is a great opportunity for you to earn discounts. It has 50 questions, which you have to attempt in one hour.

Each question you answer correctly earns you a discount of Rs 1,000, and you can earn a maximum discount of Rs 75,000! (Answering all 50 questions correctly rewards you with an additional Rs 25,000 scholarship.)
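To see how the numbers add up, here is a minimal Python sketch of the discount arithmetic described above. The function name `scholarship_discount` and the constants are made up for illustration, and the sketch assumes there is no negative marking, which the test description does not mention.

```python
# Illustrative sketch only: names and the no-negative-marking
# assumption are ours, not part of the official test rules.
TOTAL_QUESTIONS = 50
DISCOUNT_PER_CORRECT = 1_000   # Rs per correct answer
PERFECT_SCORE_BONUS = 25_000   # extra Rs for 50/50


def scholarship_discount(correct_answers: int) -> int:
    """Return the discount in rupees for a given number of correct answers."""
    if not 0 <= correct_answers <= TOTAL_QUESTIONS:
        raise ValueError("correct_answers must be between 0 and 50")
    discount = correct_answers * DISCOUNT_PER_CORRECT
    if correct_answers == TOTAL_QUESTIONS:
        discount += PERFECT_SCORE_BONUS
    return discount


if __name__ == "__main__":
    for score in (30, 49, 50):
        print(score, scholarship_discount(score))
```

So a score of 49 is worth Rs 49,000, while a perfect 50 jumps to the full Rs 75,000.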

This Scholarship Test for the Data Science course is a great way to test yourself on basic aptitude and basic programming questions and to earn a massive discount on the course fees.

The PG Certificate course from IIT Roorkee covers all that you need to know in technology right now. You will learn the architecture of ChatGPT and Stable Diffusion, along with Machine Learning, Artificial Intelligence, Data Science, Data Engineering, and more! The course is delivered by professors from IIT Roorkee and industry experts, and it follows a blended mode of learning. Learners also get 365 days of access to cloud labs for hands-on practice in a gamified learning environment.

Data Scientists, Data Engineers, and Data Architects are some of the most sought-after professionals today. With businesses and life-changing innovations being data-driven in every domain, the demand for expertise in Deep Learning and Machine Learning is on the rise. This PG Certificate Course gives you the skills and knowledge required to propel your career in Data Science.

So what are you waiting for? Seats in the PG Certificate Course in Data Science from IIT Roorkee are limited. Take the Scholarship Test, earn discounts, and enroll now.

Link to the Scholarship Test is here.

Details about the PG Certificate Course in AI, Machine Learning, and Data Science are here.

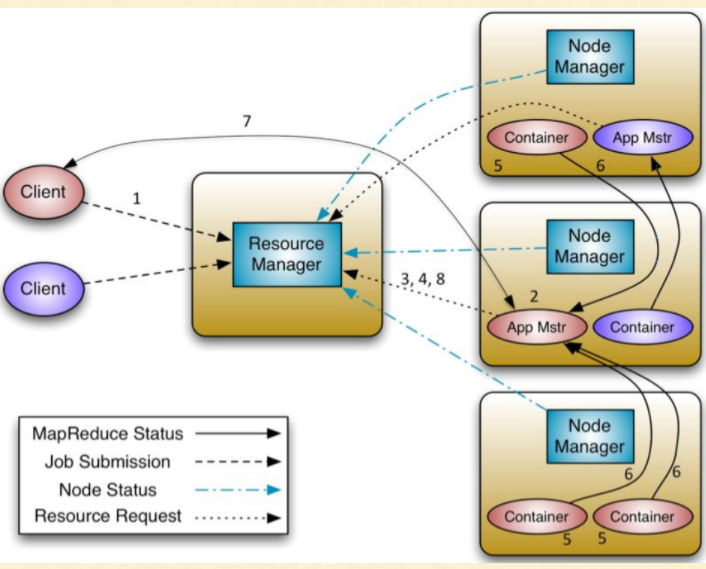
