[This post originally appeared on KnowBigData.com and is fairly old, so many things have changed since it was written. Many people have since moved to Spark MLlib, and we have moved to CloudxLab.com.]

What is Machine Learning?

Machine Learning, a branch of Artificial Intelligence, is the practice of programming computers to optimize a performance criterion using example data or past experience.

Types of Machine Learning

Machine learning is broadly categorized into three buckets:

- Supervised Learning – Uses labeled training data to build a model, such as a classifier, that predicts the output for unseen inputs.

- Unsupervised Learning – Uses unlabeled training data to discover hidden structure, such as clusters, without being given the correct outputs.

- Semi-Supervised Learning – Uses both labeled and unlabeled data for training, typically a small amount of labeled data combined with a large amount of unlabeled data.
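The difference between the first two categories can be sketched in a few lines of plain Python (illustrative code, not Mahout). A 1-nearest-neighbor classifier learns from labeled points, while k-means groups the same points without any labels. The function names and the toy data are hypothetical, chosen only for this example.

```python
import math

def nearest_neighbor_predict(labeled, query):
    """Supervised: return the label of the labeled example closest to `query`."""
    _, label = min(labeled, key=lambda pair: math.dist(pair[0], query))
    return label

def kmeans(points, centroids, iterations=10):
    """Unsupervised: group unlabeled points around k centroids (Lloyd's algorithm)."""
    clusters = []
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            tuple(sum(coords) / len(c) for coords in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical toy data: 2-D points, with labels used only in the supervised case.
labeled = [((1.0, 1.0), "small"), ((1.5, 2.0), "small"),
           ((8.0, 8.0), "large"), ((9.0, 8.5), "large")]
points = [p for p, _ in labeled]

print(nearest_neighbor_predict(labeled, (2.0, 1.5)))  # small
centroids, clusters = kmeans(points, [(0.0, 0.0), (10.0, 10.0)])
print(centroids)  # [(1.25, 1.5), (8.5, 8.25)]
```

The classifier needs the labels "small" and "large" to make a prediction; k-means only finds the two groups and leaves naming them to us.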

Machine Learning Applications

- Recommending friends, dates, or products to end users.

- Classifying content into predefined groups.

- Finding similar content based on object properties.

- Identifying key topics in large collections of text.

- Detecting anomalies within given data.

- Ranking search results by learning from user feedback.

- Classifying DNA sequences.

- Sentiment analysis / opinion mining.

- Computer vision.

- Natural language processing.

- Bioinformatics.

- Speech and handwriting recognition.

Mahout

A mahout is a keeper or driver of elephants, a fitting name given Hadoop's elephant mascot. Mahout is a scalable machine learning library written in Java and built on Hadoop. Its original design was driven by Chu et al.’s paper “Map-Reduce for Machine Learning on Multicore”. Mahout started as a sub-project of Apache Lucene and became an Apache top-level project (TLP) in April 2010.
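The idea behind the paper cited above is that many learning algorithms can be expressed in a "summation form": each worker computes sums over its own slice of the data, and those partial sums are then added together. A minimal plain-Python sketch of that idea for fitting a straight line by least squares follows. It simulates the map and reduce steps in a single process and is not Mahout or Hadoop code; the function names and data are hypothetical.

```python
from functools import reduce

def map_shard(shard):
    """Map step: compute partial sums over one shard of (x, y) pairs."""
    return (
        len(shard),                    # n
        sum(x for x, _ in shard),      # sum of x
        sum(y for _, y in shard),      # sum of y
        sum(x * y for x, y in shard),  # sum of x*y
        sum(x * x for x, _ in shard),  # sum of x^2
    )

def combine(a, b):
    """Reduce step: partial sums from different shards simply add up."""
    return tuple(u + v for u, v in zip(a, b))

def fit_line(shards):
    """Least-squares fit of y = slope * x + intercept from the combined sums."""
    n, sx, sy, sxy, sxx = reduce(combine, map(map_shard, shards))
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    intercept = (sy - slope * sx) / n
    return slope, intercept

# Hypothetical data on the line y = 2x + 1, split into three shards
# as if each shard lived on a different core or node.
shards = [[(0, 1), (1, 3)], [(2, 5), (3, 7)], [(4, 9), (5, 11)]]
print(fit_line(shards))  # (2.0, 1.0)
```

Because addition is associative and commutative, the shards can be processed in any order and on any number of workers without changing the result.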

- Introduction to Machine Learning and Mahout

- Machine Learning – Types

- Machine Learning – Applications

- Machine Learning – Tools

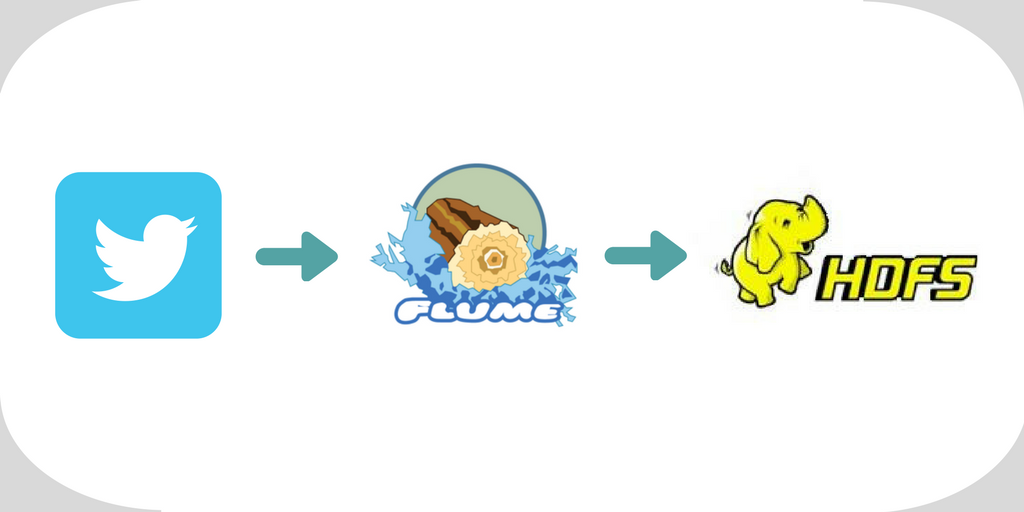

- Mahout – Recommendation Example

- Mahout – Use Cases

- Mahout – Live Example

- Mahout – Other Recommender Algorithms
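User-based collaborative filtering, one common way to build the kind of recommender listed in the outline, can be sketched in plain Python. This sketch assumes cosine similarity over co-rated items and a fixed-size neighborhood of similar users; it is not Mahout's API (Mahout's Java Taste recommender exposes the same idea through classes such as `GenericUserBasedRecommender` and `NearestNUserNeighborhood`). The users, items, and ratings are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two users, computed over the items both rated."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in common))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in common))
    return dot / (norm_a * norm_b)

def recommend(ratings, user, neighborhood_size=2, how_many=1):
    """Predict ratings for unseen items as a similarity-weighted average of neighbors."""
    others = [(cosine_similarity(ratings[user], ratings[o]), o)
              for o in ratings if o != user]
    neighbors = sorted(others, reverse=True)[:neighborhood_size]
    scores, weights = {}, {}
    for sim, other in neighbors:
        if sim <= 0:
            continue  # ignore neighbors with no positive similarity
        for item, rating in ratings[other].items():
            if item in ratings[user]:
                continue  # only recommend items the user has not rated yet
            scores[item] = scores.get(item, 0.0) + sim * rating
            weights[item] = weights.get(item, 0.0) + sim
    predicted = {item: scores[item] / weights[item] for item in scores}
    return sorted(predicted.items(), key=lambda kv: kv[1], reverse=True)[:how_many]

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}
ratings = {
    "alice": {"item1": 5, "item2": 3, "item3": 4},
    "bob":   {"item1": 5, "item2": 3, "item3": 4, "item4": 5},
    "carol": {"item1": 1, "item2": 5, "item5": 2},
    "dave":  {"item1": 4, "item3": 4, "item4": 4, "item5": 1},
}
top = recommend(ratings, "alice", neighborhood_size=2, how_many=2)
print([item for item, _ in top])  # ['item4', 'item5']
```

Restricting similarity to co-rated items keeps the sketch short; Pearson correlation is another common similarity choice for rating data.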

Machine Learning with Mahout Presentation

Machine Learning with Mahout Video

https://www.youtube.com/embed/PZsTLIlSZhI