During a keynote speech in India, an elderly attendee asked a question: why don't we use Sanskrit for coding in AI? Though this question might look very strange to researchers at first, there is some deep background to it.

Long ago, when people were trying to build language translators, the main idea was to have an intermediate language that every other language could be translated to and from. If we build a direct translator from each language A to each language B, the number of translators grows too quickly. Imagine we have 10 languages: we would have to build 90 (10*9) such translators. With an intermediate language, we would need only 10 encoders, one to convert each language into the intermediate language, and 10 decoders to convert the intermediate language back into each language. Therefore, there would be only 20 models in total.
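To see how quickly the gap widens, here is a small sketch of that counting argument. The function names `direct_translators` and `pivot_translators` are made up for this post; the formulas are just the 10*9 and 10+10 arithmetic from above, generalized to n languages.

```python
def direct_translators(n: int) -> int:
    """One model per ordered (source, target) pair of distinct languages."""
    return n * (n - 1)


def pivot_translators(n: int) -> int:
    """One encoder into the intermediate language and one decoder out of it, per language."""
    return 2 * n


for n in (10, 50, 100):
    print(f"{n} languages: {direct_translators(n)} direct models, "
          f"{pivot_translators(n)} models with an intermediate language")
```

The direct approach grows with the square of the number of languages, while the intermediate-language approach grows linearly.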

So, the need for an intermediate language seemed clear. The question was what the intermediate language should be. Some scientists proposed Sanskrit because it has a well-defined grammar. Others thought a programming language that can be loaded dynamically would be better, and they designed programming languages such as Lisp. Soon enough, they all realized that neither natural languages nor programming languages such as Lisp would suffice, for multiple reasons. First, a single language may not have enough words to represent every emotion expressed in other languages. Second, all of this would have to be coded manually.

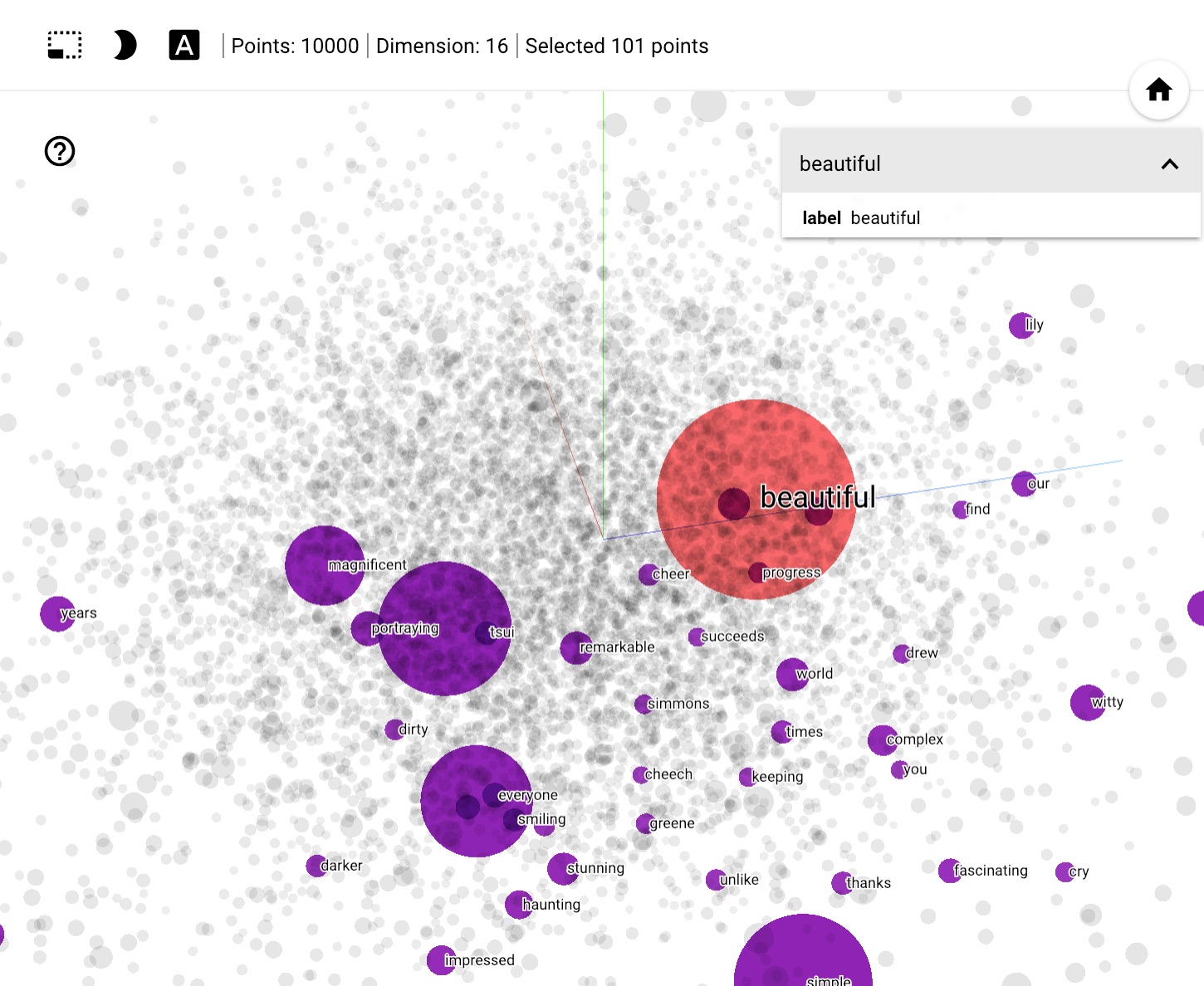

The approach that became successful represents the intermediate language as a list of numbers, along with more numbers that represent the context. Instead of manually coding the meaning of each word, the idea that worked was to represent a word or a sentence with a list of numbers. This idea has brought a revolution in natural language understanding, and an enormous amount of research is happening in this domain today. Please check GPT-3, DALL-E, and Imagen.

If you subtract Woman from Queen and add Man, what should the result be? It should be King, right? This can be easily demonstrated using word embeddings.

Queen - woman + man = King

Similarly, Emperor - man + woman = Empress

Yes, this works, at least approximately: each of these words is represented by a list of numbers, and the result of the arithmetic lands closest to the expected word. So, we are truly able to represent the meaning of words with a list of numbers. If you think about it, we learned the meaning of each word in our mother tongue without using a dictionary. Instead, we figured the meaning out from the context.
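Here is a minimal sketch of that arithmetic. The vectors below are hand-made toy values that I picked for illustration (four made-up dimensions: royalty, imperial rank, male, female); they are not taken from any trained model. Real embeddings have hundreds of learned dimensions, and the arithmetic only lands near the expected word rather than exactly on it. The `analogy` helper is hypothetical as well.

```python
import math

# Hypothetical hand-made vectors: [royalty, imperial rank, male, female].
EMBEDDINGS = {
    "king":    [0.9, 0.1, 0.9, 0.1],
    "queen":   [0.9, 0.1, 0.1, 0.9],
    "emperor": [0.9, 0.9, 0.9, 0.1],
    "empress": [0.9, 0.9, 0.1, 0.9],
    "man":     [0.1, 0.1, 0.9, 0.1],
    "woman":   [0.1, 0.1, 0.1, 0.9],
}


def cosine(a, b):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(start, minus, plus):
    """Return the word whose vector is closest to start - minus + plus."""
    target = [
        s - m + p
        for s, m, p in zip(EMBEDDINGS[start], EMBEDDINGS[minus], EMBEDDINGS[plus])
    ]
    # The three input words are excluded from the candidates.
    candidates = [w for w in EMBEDDINGS if w not in {start, minus, plus}]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


print(analogy("queen", "woman", "man"))    # king
print(analogy("emperor", "man", "woman"))  # empress
```

Excluding the input words from the candidates matters with real embeddings, because the nearest vector to the result is often one of the words you started with.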

In our minds, we have some representation of each word, and it is definitely not in the form of another natural language. Algorithms work on a similar principle: they figure out the meaning of words in terms of lists of numbers. It is very interesting to understand how these algorithms work. They go through a large corpus of text, such as Wikipedia or news archives, and work out the numbers with which each word can be represented. This is an optimization problem: find numbers for each word such that the distance between words that appear in similar contexts is much smaller than the distance between words that appear in different contexts.

The word Cow is closer to Buffalo than to Cup because Cow and Buffalo usually appear in similar contexts in sentences.
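The "similar context" idea can be sketched without any training at all. The snippet below swaps in a much simpler technique than the optimization described above: it just counts which words share a sentence with each word and uses those counts as the word's list of numbers. The six-sentence corpus is invented for this post, and the names `context_vectors` and `similarity` are my own, so treat this as an illustration of the principle rather than how real embedding models are trained.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up corpus, for illustration only.
CORPUS = [
    "the cow eats grass in the field",
    "the buffalo eats grass in the field",
    "the farmer milks the cow",
    "the farmer milks the buffalo",
    "she drinks tea from the cup",
    "he filled the cup with tea",
]


def context_vectors(sentences):
    """Map each word to counts of the other words sharing a sentence with it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            for j, neighbour in enumerate(tokens):
                if i != j:
                    vectors[word][neighbour] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


VECTORS = context_vectors(CORPUS)


def similarity(w1, w2):
    return cosine(VECTORS[w1], VECTORS[w2])


print("cow vs buffalo:", round(similarity("cow", "buffalo"), 2))
print("cow vs cup:", round(similarity("cow", "cup"), 2))
```

In this toy corpus Cow and Buffalo appear with exactly the same neighbours, so they score much higher than Cow and Cup, which only share the word "the".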

So, in summary, it is unreasonable to keep insisting on a natural language to represent the meaning of a word or sentence.

I hope this makes sense to you. Please post your opinions in the comments.