Whenever CloudxLab gives a live talk, whether a presentation or a conference session, we want to live stream and record it. The main challenge is that the presenter drifts out of the frame as they move around. For us, hiring a cameraman for a three-hour session is not a viable option. So we thought of creating an AI-based pan-and-tilt platform that keeps the camera focussed on the speaker.

Here are the step-by-step instructions to create such a camera, along with the code needed.

The Result

This is what the output looks like: you will notice the camera rotate as the person moves. It is just the beginning, and there are a lot of possibilities. And yes, we should probably get a better video 🙂

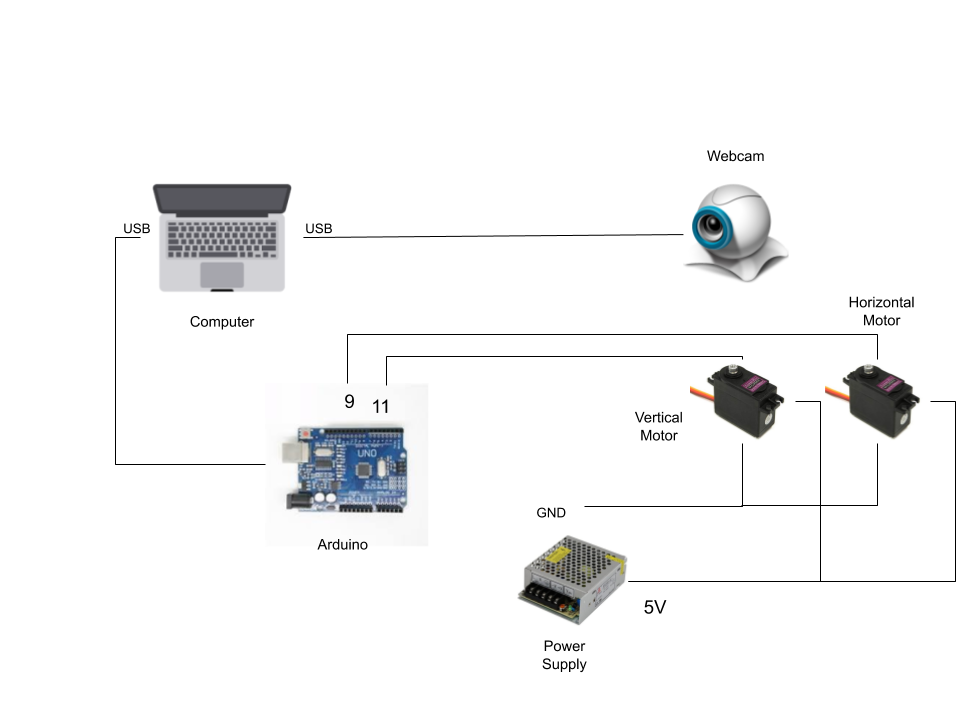

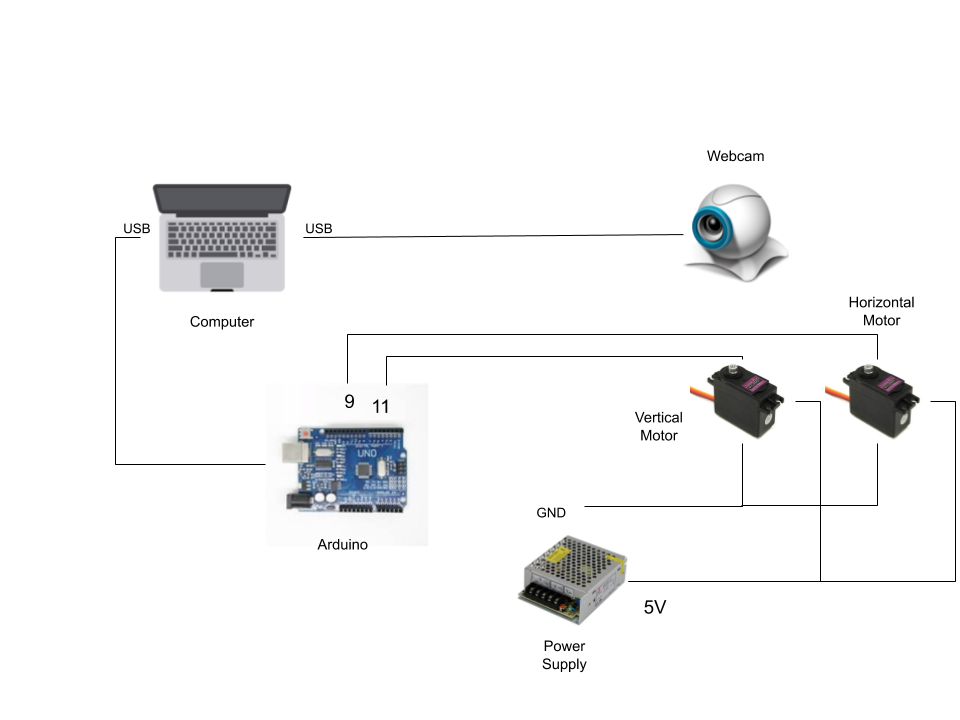

The Setup

If you are planning to build this, you will need the following hardware:

- A computer

- A webcam

- Pan-tilt platform (see https://www.servocity.com/kits/rotary-motion-kits/pan-tilt-kits)

- Motors that can lift the webcam and the pan-tilt platform. I ended up buying three different kinds of motors only to realize that all of them were too weak.

- Power supply. The powerful motors need more current than the Arduino can provide, so they cannot be powered from the board. I needed a 5 V, 5 A power supply.

- An Arduino Board

And for the software, you need the following:

- Python3

- OpenCV

- This GitHub repository – https://github.com/cloudxlab/facechase

- Read the repository's README to learn how to run the program

The hardware needs to be connected in the following way.

How does it work?

The way it works is:

- Read an image from the USB camera

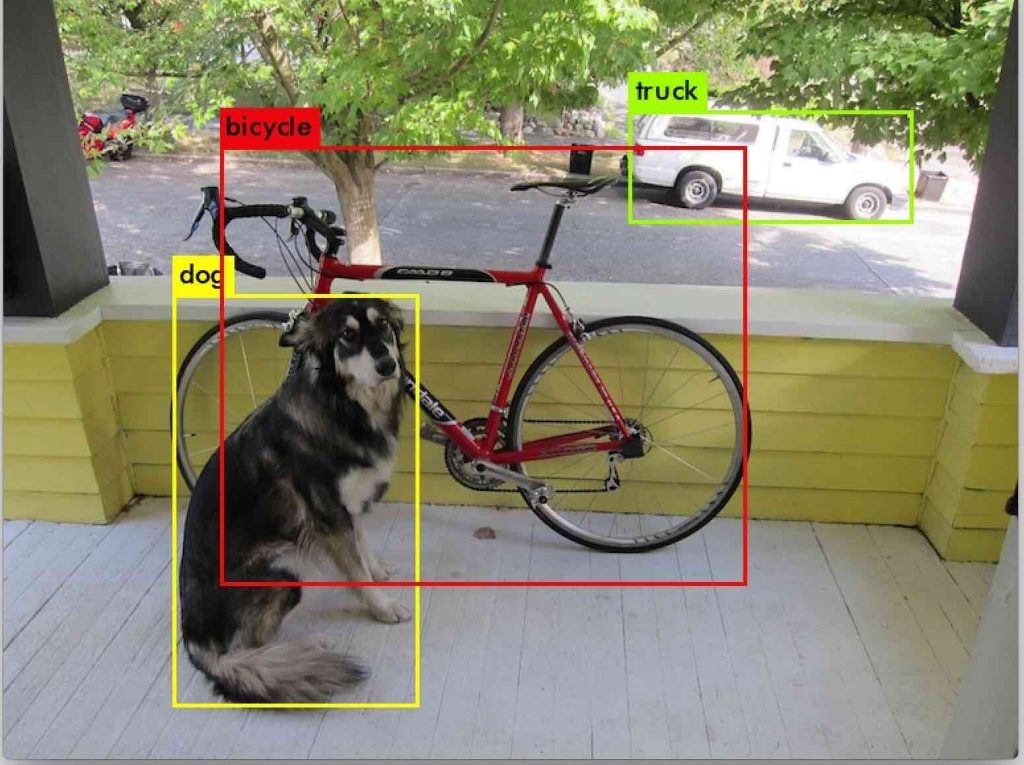

- Using YOLO with a pre-trained model, detect the objects in the image

- Keep only the persons

- Of all the persons, pick the one with the biggest bounding box

- Based on how far the centre of the bounding box is from the centre of the image, rotate the motors

- Sleep for some time, then go back to step 1
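
The selection and centring steps above can be sketched in a few lines of plain Python. This is only an illustration, not the code from the repository: the `(label, x, y, w, h)` tuple layout, the function names, and the `dead_zone` and `max_step_deg` tuning values are all assumptions made for the example.

```python
from typing import List, Optional, Tuple

# A detection is (label, x, y, w, h) in pixels, with (x, y) the top-left corner.
# This layout is an assumption for illustration, not the repository's format.
Detection = Tuple[str, int, int, int, int]


def pick_target(detections: List[Detection]) -> Optional[Detection]:
    """Keep only persons and return the one with the largest bounding box."""
    persons = [d for d in detections if d[0] == "person"]
    if not persons:
        return None
    return max(persons, key=lambda d: d[3] * d[4])


def offset_from_centre(target: Detection, frame_w: int, frame_h: int) -> Tuple[float, float]:
    """Offset of the box centre from the frame centre, as a fraction of frame size."""
    _, x, y, w, h = target
    dx = (x + w / 2 - frame_w / 2) / frame_w
    dy = (y + h / 2 - frame_h / 2) / frame_h
    return dx, dy


def motor_step(offset: float, dead_zone: float = 0.1, max_step_deg: float = 10.0) -> float:
    """Degrees to rotate on one axis; zero inside the dead zone.

    dead_zone and max_step_deg are hypothetical tuning values.
    """
    if abs(offset) < dead_zone:
        return 0.0
    step = offset * 2 * max_step_deg
    return max(-max_step_deg, min(max_step_deg, step))


if __name__ == "__main__":
    found = [("person", 0, 0, 100, 200), ("chair", 0, 0, 500, 500), ("person", 400, 100, 200, 300)]
    target = pick_target(found)
    dx, dy = offset_from_centre(target, 640, 480)
    print(target, motor_step(dx), motor_step(dy))
```

A positive `dx` means the person is to the right of the image centre. Which direction the motor must turn for that, and how many degrees one step should be, depends on how the platform is mounted, so the sign and scale would need tuning on real hardware. The dead zone is there so the camera does not jitter when the speaker is already roughly centred.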

Where is AI in it?

But where is the AI in all of this? In step 2, we use YOLO v3. YOLO was a breakthrough in object detection with convolutional networks, specifically in bounding box detection. Bounding box detection means that, while identifying objects, we also identify the rectangle that surrounds each object.

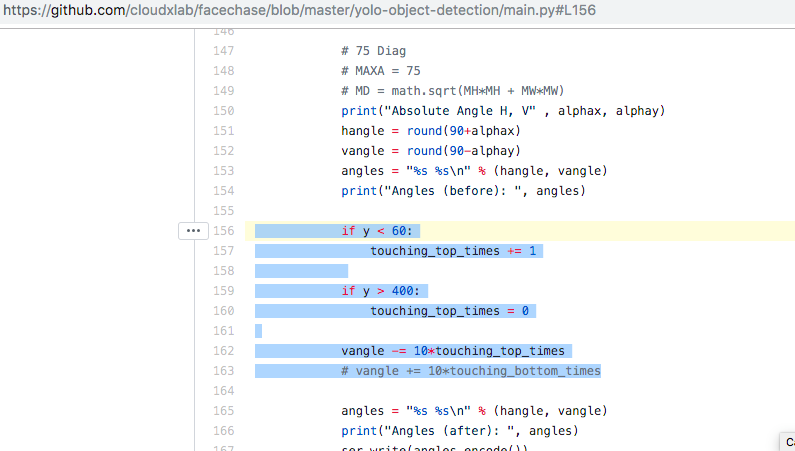
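
To make the idea of a bounding box concrete, here is a minimal sketch of turning one raw YOLO v3 detection row into a pixel rectangle. It assumes the row layout produced when a Darknet YOLO v3 model is run through OpenCV's `dnn` module: `[cx, cy, w, h, objectness, class scores...]`, with the first four values normalised to the frame size. Whether the repository uses exactly this path is an assumption, and `decode_row` and `min_conf` are hypothetical names chosen for the example.

```python
from typing import Optional, Sequence, Tuple

PERSON_CLASS_ID = 0  # "person" is the first class in the COCO label list


def decode_row(row: Sequence[float], frame_w: int, frame_h: int,
               min_conf: float = 0.5) -> Optional[Tuple[int, float, int, int, int, int]]:
    """Turn one detection row into (class_id, confidence, x, y, w, h) in pixels.

    Assumes the layout [cx, cy, w, h, objectness, score_0, score_1, ...],
    where cx, cy, w, h are fractions of the frame width and height.
    """
    scores = row[5:]
    class_id = max(range(len(scores)), key=lambda i: scores[i])
    confidence = scores[class_id]
    if confidence < min_conf:
        return None  # too uncertain to act on
    w = int(row[2] * frame_w)
    h = int(row[3] * frame_h)
    x = int(row[0] * frame_w - w / 2)  # convert centre to top-left corner
    y = int(row[1] * frame_h - h / 2)
    return class_id, confidence, x, y, w, h


if __name__ == "__main__":
    # A made-up row with only two classes, for illustration.
    row = [0.5, 0.5, 0.25, 0.5, 0.9, 0.8, 0.1]
    print(decode_row(row, 640, 480))
```

Keeping only rows whose `class_id` equals `PERSON_CLASS_ID` gives the "keep only the persons" step. A real pipeline would normally also apply non-maximum suppression to merge overlapping boxes, which is left out here for brevity.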

One of the interesting challenges I faced during implementation was that when the camera is pointed at the lower part of the body and the bounding box covers the entire height of the frame, the camera would not move up, because the centre of the box still sits near the centre of the image. So I added a hack: if the bounding box is almost touching the top of the frame, rotate the camera up (see the code).

That's pretty much it.
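
A minimal sketch of that hack, assuming pixel coordinates with `y = 0` at the top of the frame. The function name and the 5% `margin` are hypothetical; the repository's actual check may differ.

```python
def should_tilt_up(box_top: int, frame_h: int, margin: float = 0.05) -> bool:
    """True when the box top is within `margin` of the top edge of the frame.

    margin is a hypothetical fraction of the frame height.
    """
    return box_top <= margin * frame_h


if __name__ == "__main__":
    frame_h = 480
    # Box covering the full height: its centre is the frame centre, so the
    # normal centring logic sees no vertical offset and would not move.
    box_top, box_h = 0, 480
    centre_offset = (box_top + box_h / 2) - frame_h / 2
    print(centre_offset, should_tilt_up(box_top, frame_h))
```

In this example the vertical offset is zero, yet the check still asks for an upward tilt, which is exactly the case the centring rule alone misses.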

Areas of Improvement

There are many areas of improvement in this setup:

- We can use stepper motors to make it move faster

- Right now, object detection takes approximately 1 second. We can make it faster by trimming the model, using a GPU, or using other object-tracking mechanisms.

As a closing note, the possibilities of such a tool are huge. You could make a photographer that learns how to find the angle of maximum variance. Or maybe you could build a point-and-shoot gun for use in defence.

[Big thanks to Ujjwal John for setting up the hardware. He has got amazing skills in hardware hacking!]