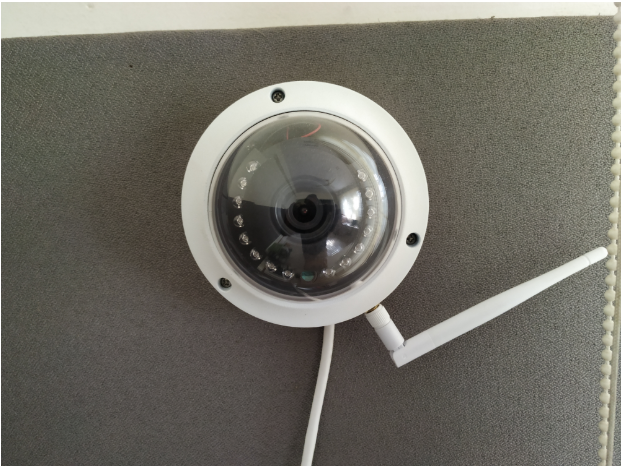

In this blog we explore how to run YOLO, a very popular computer vision algorithm, on a live CCTV feed. YOLO (You Only Look Once) is an object detection algorithm known for being remarkably fast and efficient. There is plenty of documentation on running YOLO on video from files, USB cameras, or Raspberry Pi cameras. This series of blogs describes in detail how to set up a generic CCTV camera and run YOLO object detection on its live feed. If you are interested in finding out more about YOLO, I have listed a few articles at the end of this blog.

Set up a CCTV camera with RTSP

This blog lists in detail the methods to set up a generic CCTV camera with a live RTSP feed. Note the RTSP URL, as we will need it in the later stages. The RTSP (

Install Python and OpenCV

We will use Python 3.6 and OpenCV 4 in this walkthrough. Ensure your computer has both installed, in the appropriate versions. If you have never installed OpenCV, please refer to this guide, which documents the installation of OpenCV on several different operating systems.
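Before going further, it can help to confirm that the interpreter and OpenCV versions match what this walkthrough expects. The snippet below is a minimal sketch: the helper name meets_minimum is made up for illustration, and the minimum versions (Python 3.6, OpenCV 4.0) are simply the ones mentioned above.

```python
import sys


def meets_minimum(version, minimum):
    """Return True if a dotted version string is at least `minimum` (a tuple of ints).

    Hypothetical helper for illustration; only the leading numeric parts are compared.
    """
    parts = []
    for piece in version.split(".")[:len(minimum)]:
        digits = "".join(ch for ch in piece if ch.isdigit())
        parts.append(int(digits) if digits else 0)
    # pad short versions such as "4" so they compare like "4.0"
    parts += [0] * (len(minimum) - len(parts))
    return tuple(parts) >= tuple(minimum)


if __name__ == "__main__":
    print("Python OK:", sys.version_info[:2] >= (3, 6))
    try:
        import cv2
        print("OpenCV", cv2.__version__, "OK:", meets_minimum(cv2.__version__, (4, 0)))
    except ImportError:
        print("OpenCV is not installed in this environment")
```

If either check prints False, or OpenCV is reported as missing, fix the installation first before continuing with the steps below.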

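Install virtualenv for managing Python libraries

I strongly recommend using virtualenv to manage your Python development workflows, especially if you work on multiple Python projects simultaneously. For more details on this package, refer to its documentation. The commands below install virtualenvwrapper, a set of convenience commands on top of virtualenv, and create an environment named env1. Note that mkvirtualenv becomes available only after virtualenvwrapper.sh has been sourced in your shell.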

pip3 install virtualenvwrapper

mkvirtualenv env1

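Install necessary Python libraries with pip

We will need numpy, imutils, and OpenCV (imported as cv2) to run YOLO on a live CCTV feed. The time and os modules used in the script are part of the Python standard library and do not need to be installed. The required libraries can be installed using the command below; skip opencv-python if you already installed OpenCV in the earlier step.

pip3 install numpy imutils opencv-python

Download the YOLOv3 weights and config files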

The weights, config, and names files needed to run YOLOv3 (yolov3.weights, yolov3.cfg, and coco.names) can be downloaded from the Darknet website. Create a directory called yolo-coco and keep the files there.
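A missing or misnamed file is a common reason for the model to fail to load, so a quick check of the directory can save some debugging. The sketch below assumes the directory name yolo-coco and the three file names that the script in this blog reads; the function name missing_yolo_files is hypothetical.

```python
import os

# file names the script in this blog expects inside the YOLO directory
REQUIRED_FILES = ("coco.names", "yolov3.cfg", "yolov3.weights")


def missing_yolo_files(yolo_path="yolo-coco"):
    """Return the required files that are not present in `yolo_path`."""
    return [
        name for name in REQUIRED_FILES
        if not os.path.isfile(os.path.join(yolo_path, name))
    ]


if __name__ == "__main__":
    missing = missing_yolo_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All YOLO files found")
```

Run it from the directory that contains yolo-coco; an empty result means all three files are in place.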

Python code

Create a file called python-yolo-cctv.py and copy the following code into it. Replace the <RTSP_URL> placeholder with the RTSP URL of your camera.

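# import the necessary packages
import os
import time

import cv2
import numpy as np
from imutils.video import FPS

# replace the placeholder with the RTSP URL of your camera
RTSP_URL = "<RTSP_URL>"
YOLO_PATH = "yolo-coco"
OUTPUT_FILE = "output/outfile.avi"

# create the output directory if needed; cv2.VideoWriter does not
# create missing directories
os.makedirs(os.path.dirname(OUTPUT_FILE), exist_ok=True)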

# load the COCO class labels our YOLO model was trained on
labelsPath = os.path.sep.join([YOLO_PATH, "coco.names"])
with open(labelsPath) as f:
    LABELS = f.read().strip().split("\n")

# minimum detection probability, and the overlap threshold used by
# non-maxima suppression
CONFIDENCE = 0.5
THRESHOLD = 0.3

# initialize a list of colors to represent each possible class label
np.random.seed(42)
COLORS = np.random.randint(0, 255, size=(len(LABELS), 3),
                           dtype="uint8")

# derive the paths to the YOLO weights and model configuration
weightsPath = os.path.sep.join([YOLO_PATH, "yolov3.weights"])
configPath = os.path.sep.join([YOLO_PATH, "yolov3.cfg"])

# load our YOLO object detector trained on COCO dataset (80 classes)
# and determine only the *output* layer names that we need from YOLO
print("[INFO] loading YOLO from disk...")
net = cv2.dnn.readNetFromDarknet(configPath, weightsPath)
ln = net.getLayerNames()
# getUnconnectedOutLayers() returns an Nx1 array in older OpenCV 4
# releases and a flat array in newer ones; flatten() handles both
ln = [ln[i - 1] for i in np.array(net.getUnconnectedOutLayers()).flatten()]

# initialize the video stream, pointer to output video file, and
# frame dimensions
vs = cv2.VideoCapture(RTSP_URL)
time.sleep(2.0)
fps = FPS().start()
writer = None
(W, H) = (None, None)
cnt = 0

# loop over frames from the RTSP stream
while True:
    cnt += 1

    # read the next frame from the stream
    (grabbed, frame) = vs.read()

    # if the frame was not grabbed, then we have reached the end
    # of the stream
    if not grabbed:
        break

    # if the frame dimensions are empty, grab them
    if W is None or H is None:
        (H, W) = frame.shape[:2]

    # construct a blob from the input frame and then perform a forward
    # pass of the YOLO object detector, giving us our bounding boxes
    # and associated probabilities
    blob = cv2.dnn.blobFromImage(frame, 1 / 255.0, (416, 416),
                                 swapRB=True, crop=False)
    net.setInput(blob)
    start = time.time()
    layerOutputs = net.forward(ln)
    end = time.time()

    # initialize our lists of detected bounding boxes, confidences,
    # and class IDs, respectively
    boxes = []
    confidences = []
    classIDs = []

    # loop over each of the layer outputs
    for output in layerOutputs:
        # loop over each of the detections
        for detection in output:
            # extract the class ID and confidence (i.e., probability)
            # of the current object detection
            scores = detection[5:]
            classID = np.argmax(scores)
            confidence = scores[classID]

            # filter out weak predictions by ensuring the detected
            # probability is greater than the minimum probability
            if confidence > CONFIDENCE:
                # scale the bounding box coordinates back relative to
                # the size of the image, keeping in mind that YOLO
                # actually returns the center (x, y)-coordinates of
                # the bounding box followed by the box's width and
                # height
                box = detection[0:4] * np.array([W, H, W, H])
                (centerX, centerY, width, height) = box.astype("int")

                # use the center (x, y)-coordinates to derive the top
                # and left corner of the bounding box
                x = int(centerX - (width / 2))
                y = int(centerY - (height / 2))

                # update our list of bounding box coordinates,
                # confidences, and class IDs
                boxes.append([x, y, int(width), int(height)])
                confidences.append(float(confidence))
                classIDs.append(classID)

    # apply non-maxima suppression to suppress weak, overlapping
    # bounding boxes
    idxs = cv2.dnn.NMSBoxes(boxes, confidences, CONFIDENCE,
                            THRESHOLD)

    # ensure at least one detection exists
    if len(idxs) > 0:
        # loop over the indexes we are keeping
        for i in np.array(idxs).flatten():
            # extract the bounding box coordinates
            (x, y) = (boxes[i][0], boxes[i][1])
            (w, h) = (boxes[i][2], boxes[i][3])

            # draw a bounding box rectangle and label on the frame
            color = [int(c) for c in COLORS[classIDs[i]]]
            cv2.rectangle(frame, (x, y), (x + w, y + h), color, 2)
            text = "{}: {:.4f}".format(LABELS[classIDs[i]],
                                       confidences[i])
            cv2.putText(frame, text, (x, y - 5),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

    # check if the video writer is None
    if writer is None:
        # initialize our video writer
        fourcc = cv2.VideoWriter_fourcc(*"MJPG")
        writer = cv2.VideoWriter(OUTPUT_FILE, fourcc, 30,
                                 (frame.shape[1], frame.shape[0]), True)

    # write the output frame to disk
    writer.write(frame)

    # show the output frame
    cv2.imshow("Frame", cv2.resize(frame, (800, 600)))
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

    # update the FPS counter
    fps.update()

# stop the timer and display FPS information
fps.stop()
print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# do a bit of cleanup
cv2.destroyAllWindows()

# release the file pointers; the writer is still None if no frame
# was ever read from the stream
print("[INFO] cleaning up...")
if writer is not None:
    writer.release()
vs.release()

The program is now ready to run. The live feed from the camera is received via RTSP. Each frame is run through the YOLO object detector, and the detected objects are highlighted with a labeled bounding box. The annotated frames are shown in a window and also written to the output file. The program can be stopped at any time by pressing the ‘q’ key.
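The step in the script that is easiest to get wrong is the box arithmetic: YOLO reports each box as a center point plus width and height, all as fractions of the frame size, while cv2.rectangle needs a top-left corner in pixels. The sketch below isolates that conversion in plain Python so it can be tried without a camera or model. The function name to_top_left_box is made up for illustration, and the rounding mirrors the script (truncation to int).

```python
def to_top_left_box(detection, frame_w, frame_h):
    """Convert a YOLO (center_x, center_y, width, height) box, given as
    fractions of the frame size, into a pixel (x, y, w, h) box whose
    (x, y) is the top-left corner.

    Hypothetical helper; only the first four values of `detection` are used.
    """
    cx, cy, w, h = detection[:4]
    center_x = int(cx * frame_w)
    center_y = int(cy * frame_h)
    width = int(w * frame_w)
    height = int(h * frame_h)
    x = int(center_x - width / 2)
    y = int(center_y - height / 2)
    return (x, y, width, height)


# a box centered in a 640x480 frame, a quarter as wide and half as tall
print(to_top_left_box((0.5, 0.5, 0.25, 0.5), 640, 480))  # (240, 120, 160, 240)
```

Boxes near the frame edge can yield a negative x or y, which is expected: the center is inside the frame but part of the box extends beyond it.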

Final Notes

I ran this program on my MacBook Air laptop, which has no GPU, and got about 1 FPS. With a GPU or another accelerator, the FPS can be increased significantly to achieve real-time object detection at the full frame rate. Alternatively, if you don’t have GPU acceleration, you can choose to run detection on only every 10th or 20th frame.
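The frame-skipping idea can be sketched independently of the camera. The generator below, with the made-up name select_frames, passes through only every Nth item of any frame source. In the script above, the equivalent change would be to keep calling vs.read() for every frame, so the stream keeps advancing, and to run the detector only when the cnt counter is a multiple of N; treat that as an assumption to verify against your own camera.

```python
def select_frames(frames, every_n=10):
    """Yield (index, frame) for every `every_n`-th frame; skip the rest.

    Hypothetical helper for illustration; `frames` can be any iterable.
    """
    if every_n < 1:
        raise ValueError("every_n must be at least 1")
    for index, frame in enumerate(frames):
        if index % every_n == 0:
            yield index, frame


# stand-in for a stream: 25 dummy "frames"
processed = [index for index, _ in select_frames(range(25), every_n=10)]
print(processed)  # [0, 10, 20]
```

A larger every_n lowers the processing load at the cost of detections that lag further behind the live scene.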

REFERENCES
