You can run PySpark code in a Jupyter notebook on CloudxLab. The following instructions cover versions 2.2, 2.3, 2.4 and 3.1 of Apache Spark.

What is Jupyter notebook?

The IPython Notebook is now known as the Jupyter Notebook. It is an interactive computational environment, in which you can combine code execution, rich text, mathematics, plots and rich media. For more details on the Jupyter Notebook, please see the Jupyter website.

Follow the steps below to access the Jupyter notebook on CloudxLab:

To start a Python notebook, click the “Jupyter” button under My Lab and then click “New -> Python 3”.

The initialization code is also available in the GitHub repository here.

To access Spark, you have to set several environment variables and system paths. You can do that either manually or with a package that does all this work for you. For the latter, findspark is a suitable choice. It wraps up all these tasks in just two lines of code:

import findspark

findspark.init('/usr/spark2.4.3')

Here, we have used Spark version 2.4.3. You can specify any other installed version instead. You can check the available Spark versions using the following command:

!ls /usr/spark*

If you choose to do the setup manually instead of using the package, you can access different versions of Spark by following the steps below:
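The version check above can also be done from Python, which may be handy if you want to pick an install programmatically before initializing. The following is only a minimal sketch: `list_spark_homes` is a hypothetical helper (not part of Spark or findspark), and it assumes the installs follow the `/usr/spark*` naming seen in the paths in this post.

```python
import glob
import os


def list_spark_homes(pattern="/usr/spark*"):
    """Return the directories matching `pattern`, sorted by name."""
    # Keep only directories, so stray files that match the pattern are ignored.
    return sorted(p for p in glob.glob(pattern) if os.path.isdir(p))


if __name__ == "__main__":
    # Print one candidate SPARK_HOME per line (nothing if no install matches).
    for home in list_spark_homes():
        print(home)
```

Any of the printed paths could then be passed to `findspark.init(...)` or used as `SPARK_HOME` in the manual setup.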

If you want to access Spark 2.2, use the code below:

import os

import sys

os.environ["SPARK_HOME"] = "/usr/hdp/current/spark2-client"

os.environ["PYLIB"] = os.environ["SPARK_HOME"] + "/python/lib"

# In the two lines below, use /usr/bin/python2.7 if you want to use Python 2

os.environ["PYSPARK_PYTHON"] = "/usr/local/anaconda/bin/python"

os.environ["PYSPARK_DRIVER_PYTHON"] = "/usr/local/anaconda/bin/python"

sys.path.insert(0, os.environ["PYLIB"] +"/py4j-0.10.4-src.zip")

sys.path.insert(0, os.environ["PYLIB"] +"/pyspark.zip")

If you plan to use version 2.3, use the code below to initialize:

import os

import sys

os.environ["SPARK_HOME"] = "/usr/spark2.3/"

os.environ["PYLIB"] = os.environ["SPARK_HOME"] + "/python/lib"

# In the two lines below, use /usr/bin/python2.7 if you want to use Python 2

os.environ["PYSPARK_PYTHON"] = "/usr/local/anaconda/bin/python"

os.environ["PYSPARK_DRIVER_PYTHON"] = "/usr/local/anaconda/bin/python"

sys.path.insert(0, os.environ["PYLIB"] +"/py4j-0.10.7-src.zip")

sys.path.insert(0, os.environ["PYLIB"] +"/pyspark.zip")

If you plan to use version 2.4, use the code below to initialize:

import os

import sys

os.environ["SPARK_HOME"] = "/usr/spark2.4.3"

os.environ["PYLIB"] = os.environ["SPARK_HOME"] + "/python/lib"

# In the two lines below, use /usr/bin/python2.7 if you want to use Python 2

os.environ["PYSPARK_PYTHON"] = "/usr/local/anaconda/bin/python"

os.environ["PYSPARK_DRIVER_PYTHON"] = "/usr/local/anaconda/bin/python"

sys.path.insert(0, os.environ["PYLIB"] +"/py4j-0.10.7-src.zip")

sys.path.insert(0, os.environ["PYLIB"] +"/pyspark.zip")

Now, initialize the entry points of Spark, SparkContext and SparkConf (the old style):

from pyspark import SparkContext, SparkConf

conf = SparkConf().setAppName("appName")

sc = SparkContext(conf=conf)

Once sc and conf have been initialized successfully, use the code below to test:

rdd = sc.textFile("/data/mr/wordcount/input/")

print(rdd.take(10))

print(sc.version)

You can also initialize Spark the Spark 2 (DataFrame) way, as follows:

# Entry point for Spark 2.x

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("Spark SQL basic example").enableHiveSupport().getOrCreate()

sc = spark.sparkContext

# Now you even use hive

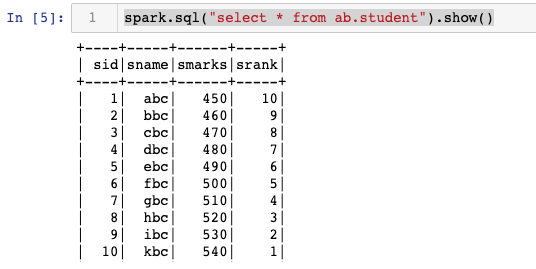

# Here we are querying the hive table student located in ab

spark.sql("select * from ab.student").show()

# it display something like this:

You can also initialize Spark 3.1 using the code below:

import findspark

findspark.init('/usr/spark-3.1.2')
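
The manual setup blocks earlier in this post differ only in the value of SPARK_HOME and the version of the py4j archive. As a rough sketch, they could be folded into one function that looks up the py4j archive instead of hardcoding its version. `configure_spark` is a hypothetical helper name, not part of Spark or findspark, and the sketch assumes every install keeps `pyspark.zip` and a `py4j-*-src.zip` under `python/lib`, as the paths above suggest.

```python
import glob
import os
import sys


def configure_spark(spark_home, python_bin="/usr/local/anaconda/bin/python"):
    """Set the environment variables and sys.path entries used in the manual setup.

    Hypothetical helper: assumes <spark_home>/python/lib contains pyspark.zip
    and at least one py4j-*-src.zip archive.
    """
    spark_home = spark_home.rstrip("/")
    pylib = os.path.join(spark_home, "python", "lib")

    # Look up the py4j archive rather than hardcoding its version number.
    py4j_zips = sorted(glob.glob(os.path.join(pylib, "py4j-*-src.zip")))
    if not py4j_zips:
        raise FileNotFoundError("no py4j-*-src.zip found in " + pylib)

    os.environ["SPARK_HOME"] = spark_home
    os.environ["PYLIB"] = pylib
    os.environ["PYSPARK_PYTHON"] = python_bin
    os.environ["PYSPARK_DRIVER_PYTHON"] = python_bin

    # Same order as the manual blocks: py4j first, then pyspark.zip,
    # so pyspark.zip ends up at the front of sys.path.
    sys.path.insert(0, py4j_zips[-1])
    sys.path.insert(0, os.path.join(pylib, "pyspark.zip"))
    return pylib
```

With this in place, calling `configure_spark("/usr/spark2.4.3")` before `from pyspark import SparkContext, SparkConf` would stand in for the Spark 2.4 block, and passing `python_bin="/usr/bin/python2.7"` would select Python 2 as described in the comments above.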