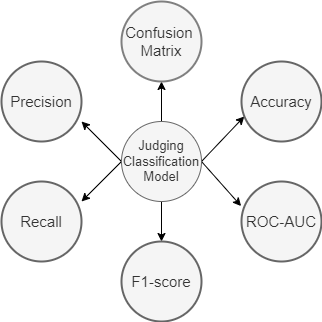

In this blog, we will discuss commonly used classification metrics. We will cover Accuracy Score, Confusion Matrix, Precision, Recall, F-Score, and ROC-AUC, and then learn how to extend them to multi-class classification. We will also discuss which metric is most suitable in which scenario.

First, let’s understand some important terms used throughout the blog:

True Positive (TP): When you predict an observation belongs to a class and it actually does belong to that class.

True Negative (TN): When you predict an observation does not belong to a class and it actually does not belong to that class.

False Positive (FP): When you predict an observation belongs to a class and it actually does not belong to that class.

False Negative (FN): When you predict an observation does not belong to a class and it actually does belong to that class.
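To make these four definitions concrete, here is a minimal sketch in plain Python that counts them for a binary problem. The function name `confusion_counts`, the `positive` parameter, and the toy labels are assumptions made for illustration; they are not taken from any library.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP and FN, treating `positive` as the class of interest.

    Hypothetical helper for illustration only.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1  # predicted the class, and it really is that class
        elif predicted != positive and actual != positive:
            tn += 1  # predicted not the class, and it really is not
        elif predicted == positive and actual != positive:
            fp += 1  # predicted the class, but it is not that class
        else:
            fn += 1  # predicted not the class, but it is that class
    return tp, tn, fp, fn


# Toy labels, invented for this example
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(f"TP={tp} TN={tn} FP={fp} FN={fn}")  # TP=3 TN=3 FP=1 FN=1
```

Every observation falls into exactly one of the four buckets, so the counts always add up to the number of observations.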

All of the classification metrics covered here are computed from these four terms. Let’s start with the metrics themselves.
