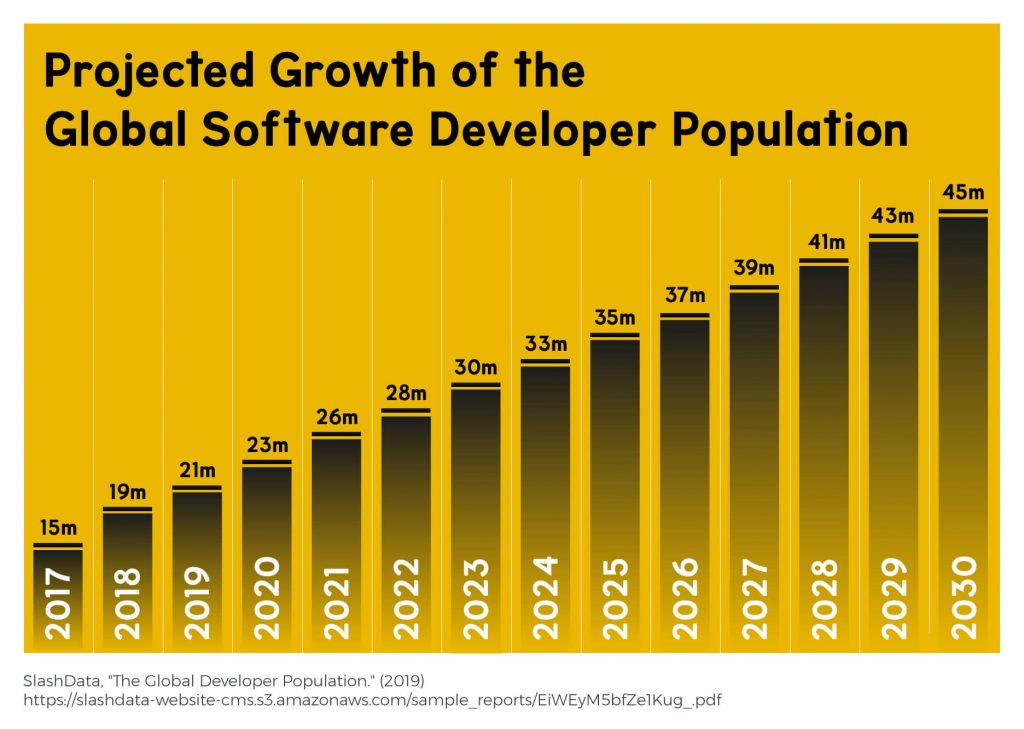

Technology is changing the world at an unprecedented pace, and the demand for Data Science and Artificial Intelligence (AI) skills is growing faster than ever. Whether you’re a recent graduate, a seasoned professional, or simply looking to upskill, now is the perfect time to hone your skills in these exciting fields.

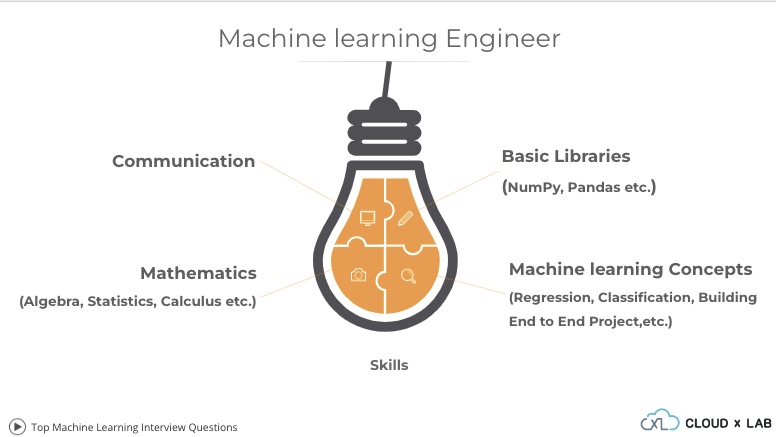

If you want to innovate or solve complex problems, you need to equip yourself with the right tools and technologies today. These include Machine Learning, Artificial Intelligence, Deep Learning, ChatGPT, Stable Diffusion, Data Science, Data Engineering, and so much more!

Here are ten reasons why you should consider investing in Data Science and AI/ML training today.

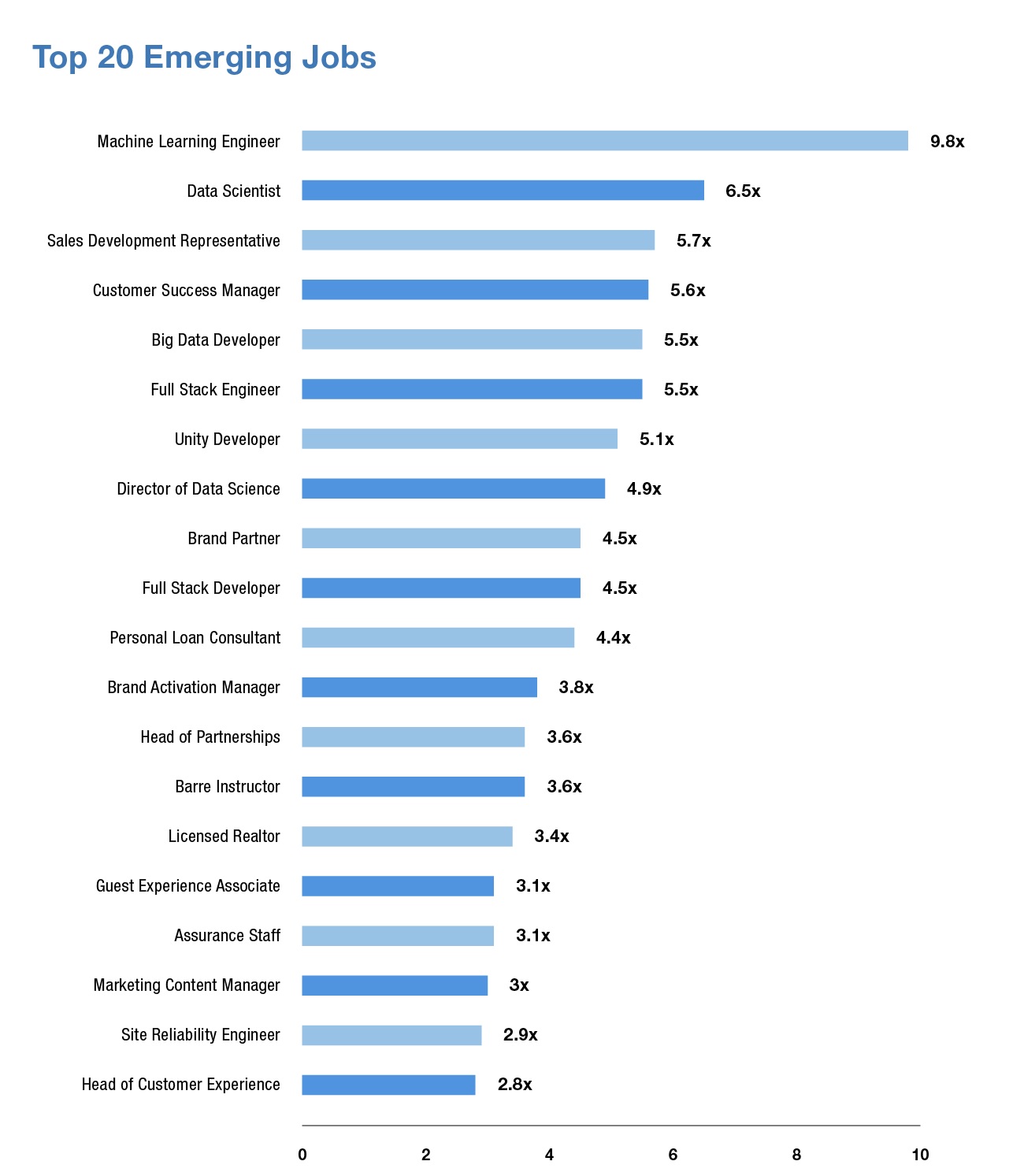

1. The Job Market is Booming

Data Science and AI are among the fastest-growing fields, and the demand for professionals with these skills is expected to keep rising. According to a recent study, the number of job postings for data scientists has increased by almost 75% over the past five years, and the demand for AI professionals is growing even faster.